SPLASH: a problematic trial design for an active Lutetium-PSMA product in metastatic castration-resistant prostate cancer

Presented at ESMO, the compound shows activity; however, the trial design is problematic.

During the European Society for Medical Oncology (ESMO) annual meeting in 2024, results from the SPLASH trial were presented. SPLASH is evaluating a Lutetium-PSMA product (177Lu-PNT2002) in patients with PSMA-positive, metastatic castration-resistant prostate cancer. The product demonstrated activity (i.e., tumor shrinkage, with an overall response rate of 38.1%). However, as a Phase 3 trial, SPLASH was supposed to demonstrate efficacy compared to the current standard of care (i.e., does that activity translate into better clinical outcomes?). The primary endpoint of SPLASH, radiographic PFS, was improved with 177Lu-PNT2002. Here, I highlight issues with the trial design and analyses in SPLASH.

Unethical control arm: enough is enough

ARPI switch: what is that?

ARPIs are androgen receptor pathway inhibitors, such as abiraterone (ABI) or enzalutamide (ENZA). To enter the SPLASH trial, patients must have previously received one of these two. Upon enrollment, patients in the control arm were then given the other ARPI: those who had received ABI were given ENZA, and vice versa. The issue is that ABI followed by ENZA has limited efficacy, while ENZA followed by ABI shows almost none (see this publication).

In other words, this ARPI-switch control arm is suboptimal, inadequate, and not reflective of the current standard of care. If any proof of this is needed, we can simply look at the response rate of patients in the control arm under the ARPI-switch treatment, which is 12% in SPLASH, demonstrating the minimal activity of this approach.

Since 177Lu-PNT2002 was competing against a poor comparator, it is little surprise that the primary endpoint, radiographic PFS, was improved.

Problematic crossover

In SPLASH, a crossover was incorporated into the trial design. This means that patients in the control arm could receive the experimental 177Lu-PNT2002 product upon radiographic progression. As a result, 85% of patients in the control arm received it after progression.

Is this a good approach?

Such a crossover would be desirable if the new product had already been proven beneficial compared to other options. That is not the case here, since this is the core question of the trial. If the product turns out not to be beneficial, patients in the control group would first receive a suboptimal control treatment (ARPI switch) and then the 177Lu-PNT2002 product, further delaying their access to potentially life-prolonging therapies such as taxanes.

This concept of when crossover is desirable and when it becomes problematic is key in oncology and is best explained here.

In SPLASH, I am concerned that patients were eager to enroll despite knowing the control arm was suboptimal, likely because they knew they could access the “new drug” later through the crossover. However, as we have seen, this crossover was problematic in itself.

P-hacking in survival sensitivity analyses?

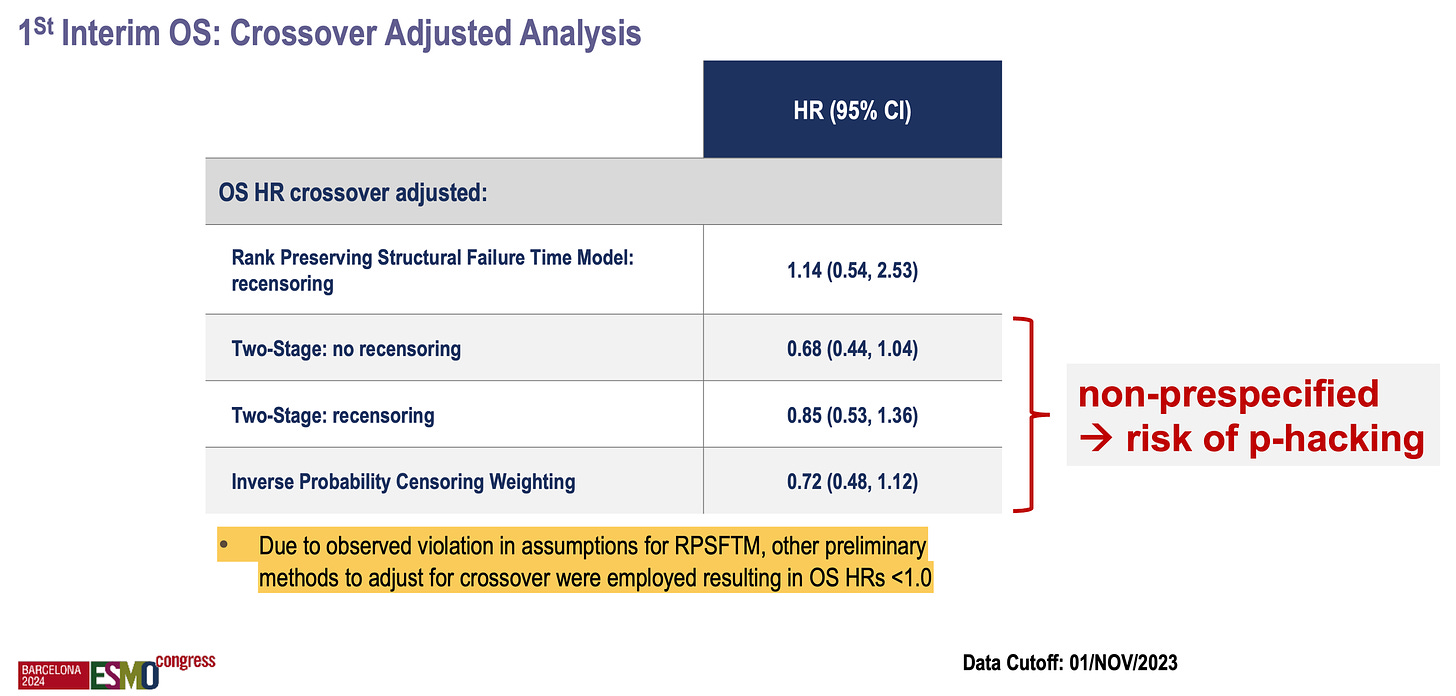

The first interim overall survival analysis in SPLASH, although neither significant nor mature, raises the possibility of a survival decrement with the new therapy (HR = 1.11, 95% CI: 0.73–1.69).
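For readers who want to see why this interval counts as "not significant", here is a rough back-of-the-envelope sketch. It assumes the reported 95% CI is a symmetric Wald interval on the log-hazard scale, which is a common convention but is my assumption and not something stated in the presentation. The function name `wald_from_ci` is purely illustrative.

```python
import math


def wald_from_ci(hr, lower, upper, z_crit=1.959964):
    """Approximate SE, z statistic, and two-sided p-value from a hazard
    ratio and its 95% CI, ASSUMING a Wald interval on the log scale."""
    log_hr = math.log(hr)
    # Width of the CI on the log scale equals 2 * z_crit * SE.
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = log_hr / se
    # Two-sided normal p-value: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return se, z, p


# Values reported for the interim overall survival analysis in SPLASH.
se, z, p = wald_from_ci(1.11, 0.73, 1.69)
print(f"SE(log HR) ~ {se:.3f}, z ~ {z:.2f}, two-sided p ~ {p:.2f}")
```

Under that assumption the p-value lands far above 0.05, consistent with an interval that comfortably includes 1. The point estimate sits on the wrong side of 1, but the data are compatible with both benefit and harm.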

However, because the trialists knew that a high proportion of patients would “cross over”, they pre-specified an analysis using the RPSFTM (rank-preserving structural failure time model). I won’t delve into the statistical details, but this analysis is intended to “correct” for crossover and attempt to isolate the effect of the drug on survival “had the crossover not occurred”. Interestingly, in a paper by Vinay Prasad, Sunny Kim, and Alyson Haslam, they noted that this type of analysis is often inappropriately used and tends to favor the experimental therapy…

… however, that was not the case here: the RPSFTM produced a worse hazard ratio (HR = 1.14) compared to the original one (HR = 1.11), with both tending to favor the control arm!

Based on “observed violations in assumptions for RPSFTM”, the investigators conducted other types of non-prespecified sensitivity analyses, all of which favored the experimental therapy! P-hacking or data dredging involves repeatedly analyzing the data until favorable results are found and reported. I am concerned that this may have occurred here (see below).

To recap, the SPLASH trial included a questionable crossover but pre-specified a model to correct for it. While this model did not favor their new product, the investigators conducted additional unplanned analyses and presented results favorable to the novel therapy.
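To illustrate why unplanned extra analyses are a concern in general, here is a minimal sketch of how the chance of at least one spuriously favorable result grows with the number of analyses tried. It assumes independent analyses at a nominal 5% false-positive rate. Both are simplifying assumptions: sensitivity analyses run on the same dataset are correlated, so the true inflation is smaller, and the values of `k` below are hypothetical, not the number of analyses actually run in SPLASH.

```python
def familywise_error_rate(k, alpha=0.05):
    """Probability of at least one false-positive result among k
    independent analyses, each run at significance level alpha."""
    return 1.0 - (1.0 - alpha) ** k


# Hypothetical numbers of analyses, for illustration only.
for k in (1, 3, 5, 10):
    print(f"{k:>2} analyses -> {familywise_error_rate(k):.3f}")
```

The exact numbers matter less than the direction: the more ways the data are analyzed after the pre-specified model disappoints, the easier it becomes to find, and then present, a version that looks favorable.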

Overall conclusion

SPLASH exemplifies what we too often encounter in modern oncology trials. While the drug is undoubtedly active and may potentially benefit some (or even many) patients, limitations in the trial design prevent it from answering the most critical questions: is this drug truly effective, when should it be used, and for whom? As a result, we are left with more questions than answers.

I would be curious as to how you, or Dr. Prasad, would design a study with this drug to answer the questions you think need to be answered.

This is getting so common. It seems half of oncology drugs are really of questionable benefit. I think I saw a research paper on that somewhere.